At the heart of every environment is a set of services that run on a set of images. These images are sometimes supplied by open source or external projects, but are often built as part of the development process. For building images, we at Raftt have selected [BuildKit](https://github.com/moby/buildkit). In this article I’ll cover why we chose BuildKit, the problem we discovered, and the resolution in the upstream open source project.

But first, a bit about BuildKit itself: BuildKit is a widely used open source project for building container images. It is commonly used as the backend of the `docker buildx` command, and as a cloud-deployed service for remote image building. At Raftt we use it in the latter form: BuildKit powers our in-cluster image building. As we are always trying to improve the experience of people using our product, we dedicated time to improving image building performance. To that end, we integrated BuildKit with [Jaeger](https://www.jaegertracing.io/), a trace aggregator and visualizer that works with OpenTelemetry, and that’s when we started seeing panics from our BuildKit pods 😟.

## But first - why tracing?

To improve the performance of any process, the first thing we need is data. Tracing gathers very rich data and visualizes it in a way that makes performance bottlenecks easy to identify. Container image building is a complex process with many steps, each of which can involve external fetches from a registry, disk I/O, or computationally intensive commands. This is especially relevant for ecosystems like Node.js, where a command like `yarn install` can fetch GBs of data, and a command like `yarn build` can manipulate thousands of files. Not only can each of these stages take minutes, they also create many files in the image filesystem, leading to huge layers that take even more minutes to compress and upload. In our case, each minute of delay is a minute where a developer is idle, waiting for their dev environment to come up.

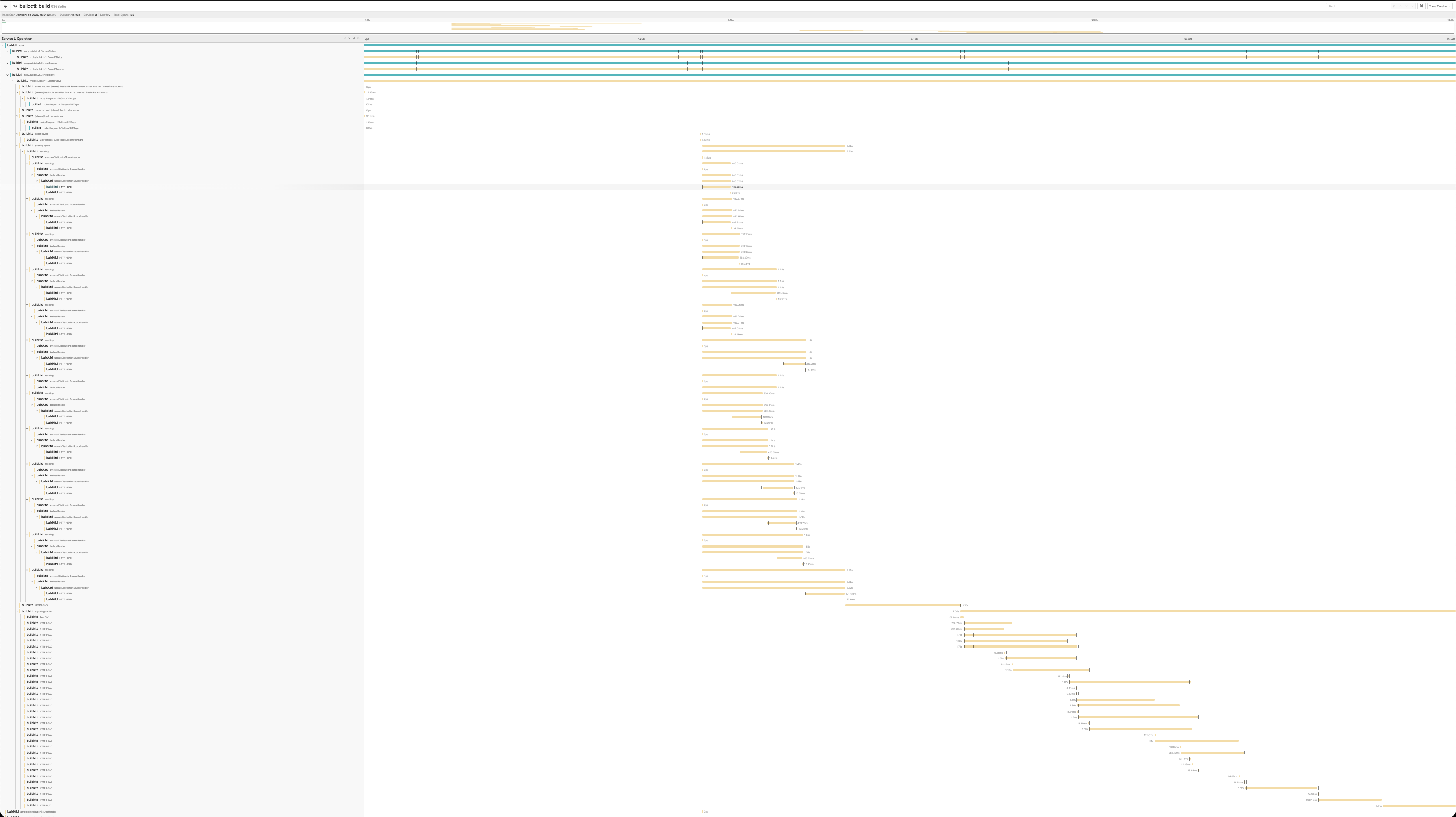

While looking through the build logs can help with understanding the process, there is limited visibility into certain stages, and it can be difficult to understand why particular build steps are slow. Luckily, BuildKit has integrated the OpenTelemetry SDK, allowing it to export traces to external services that aggregate, process and visualize them. We chose Jaeger to do all three, and deployed it to the cluster alongside BuildKit.

The resulting traces look something like this (zoomed out so we can see it all):

Right off the bat, a few things stand out:

- What is the ~7 second delay in the beginning?

- What are the processes at the end that appear to be almost sequential?

And those are great topics for future blog posts 🙂

## Panik!

Everything seemed to work well, until several hours later when we saw that in our development cluster, where we [dogfood](https://en.wikipedia.org/wiki/Eating_your_own_dog_food) our own product extensively, we started getting build failures. Looking into them, we saw that BuildKit was crashing! It was nice enough to print a stack trace:

```
panic: runtime error: slice bounds out of range [3483:3471]

goroutine 247642 [running]:
bytes.(*Buffer).Write(0xc003cf9a88, {0xc000c8423d, 0xeda7da3fd, 0x40323e})
	/usr/local/go/src/bytes/buffer.go:174 +0xd6
go.opentelemetry.io/otel/exporters/jaeger/internal/third_party/thrift/lib/go/thrift.(*TCompactProtocol).writeVarint32(0xe28c8a, 0x158640)
	/src/vendor/go.opentelemetry.io/otel/exporters/jaeger/internal/third_party/thrift/lib/go/thrift/compact_protocol.go:691 +0x6b
go.opentelemetry.io/otel/exporters/jaeger/internal/third_party/thrift/lib/go/thrift.(*TCompactProtocol).WriteI16(0xc0004d8280, {0xc000a74c30, 0x8}, 0xd340)
	/src/vendor/go.opentelemetry.io/otel/exporters/jaeger/internal/third_party/thrift/lib/go/thrift/compact_protocol.go:307 +0x26
...
github.com/moby/buildkit/control.(*Controller).Export(0xc000c4af00, {0x154cb20, 0xc007b022c0}, 0x75e363b52d889d26)
	/src/control/control.go:201 +0x97
go.opentelemetry.io/proto/otlp/collector/trace/v1._TraceService_Export_Handler.func1({0x154cb20, 0xc007b022c0}, {0x125c620, 0xc000e755c0})
	/src/vendor/go.opentelemetry.io/proto/otlp/collector/trace/v1/trace_service_grpc.pb.go:85 +0x78
github.com/moby/buildkit/util/grpcerrors.UnaryServerInterceptor({0x154cb20, 0xc007b022c0}, {0x125c620, 0xc000e755c0}, 0xc0005a8680, 0x109a6c8)
	/src/util/grpcerrors/intercept.go:14 +0x3d
github.com/grpc-ecosystem/go-grpc-middleware.ChainUnaryServer.func1.1.1({0x154cb20, 0xc007b022c0}, {0x125c620, 0xc000e755c0})
	/src/vendor/github.com/grpc-ecosystem/go-grpc-middleware/chain.go:25 +0x3a
...
```

We immediately realized that tracing was responsible: the stack runs through `go.opentelemetry.io/otel/exporters/jaeger` and the OTLP `_TraceService_Export_Handler`. Without knowing too much about the internals of BuildKit *or* the OpenTelemetry Jaeger exporter library, I created an [issue](https://github.com/open-telemetry/opentelemetry-go/issues/3063) in the OpenTelemetry GitHub repository. Friendly maintainers noted that the exporter library is not thread-safe, and that BuildKit *might* be using it in a way that doesn’t take this into account.
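For readers who haven’t run into this class of bug: the top frame of the panic is `bytes.(*Buffer).Write`, and a `bytes.Buffer` is not safe for concurrent use, so the crash is consistent with several goroutines writing into one shared buffer at the same time. The sketch below is not the Jaeger exporter’s code. `lockedBuffer` and `writeConcurrently` are hypothetical names, and it only illustrates the general remedy of putting every access to the shared buffer behind a mutex.

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
)

// lockedBuffer is a hypothetical stand-in for an encoder that writes into a
// shared bytes.Buffer. bytes.Buffer itself does no locking, so every access
// goes through the mutex.
type lockedBuffer struct {
	mu  sync.Mutex
	buf bytes.Buffer
}

// Write appends p to the buffer while holding the lock.
func (b *lockedBuffer) Write(p []byte) (int, error) {
	b.mu.Lock()
	defer b.mu.Unlock()
	return b.buf.Write(p)
}

// Len reports the number of buffered bytes while holding the lock.
func (b *lockedBuffer) Len() int {
	b.mu.Lock()
	defer b.mu.Unlock()
	return b.buf.Len()
}

// writeConcurrently starts `writers` goroutines that each append payload once,
// and returns after all of them have finished.
func writeConcurrently(b *lockedBuffer, writers int, payload []byte) {
	var wg sync.WaitGroup
	for i := 0; i < writers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			b.Write(payload)
		}()
	}
	wg.Wait()
}

func main() {
	b := &lockedBuffer{}
	writeConcurrently(b, 100, []byte("span"))
	fmt.Println(b.Len()) // 100 writers x 4 bytes each: prints 400
}
```

Without the mutex, the same program has a data race: concurrent writers can leave the buffer's internal state inconsistent, which is one way to end up with an out-of-range slice panic like the one above.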

Moving the [discussion to the BuildKit](https://github.com/moby/buildkit/issues/3004) repository unfortunately didn’t yield any responses. I attempted a brute-force fix, adding a mutex around the call that appears in the panic’s stack trace, and deployed it in our cluster. Problem solved: 24 hours passed without a single panic.

```go
func (c *Controller) Export(ctx context.Context, req *tracev1.ExportTraceServiceRequest) (*tracev1.ExportTraceServiceResponse, error) {
	if c.opt.TraceCollector == nil {
		return nil, status.Errorf(codes.Unavailable, "trace collector not configured")
	}

	// Added: serialize calls into the exporter, which is not thread-safe.
	c.exportSpansLock.Lock()
	defer c.exportSpansLock.Unlock()

	err := c.opt.TraceCollector.ExportSpans(ctx, transform.Spans(req.GetResourceSpans()))
	if err != nil {
		return nil, err
	}
	return &tracev1.ExportTraceServiceResponse{}, nil
}
```

## Fixing in upstream

So I opened a PR: https://github.com/moby/buildkit/pull/3058. I quickly got feedback from [Tõnis Tiigi](https://github.com/tonistiigi), the primary maintainer of [moby/buildkit](https://github.com/moby/buildkit). He explained that this solution isn’t correct: while it guards against concurrent usage of the exporter in this flow, other flows use the same exporter without taking the lock.

At this point the conversation was paused, as 4 days later my son Eitan was born 👶, and I was a bit busy 🙂. In December I came back to this and updated the PR, encapsulating the exporter in a struct that implements the same interface, guaranteeing thread safety and making it super easy to integrate:

```go
// threadSafeExporterWrapper wraps an OpenTelemetry SpanExporter and makes it thread-safe.
type threadSafeExporterWrapper struct {
	mu       sync.Mutex
	exporter sdktrace.SpanExporter
}

func (tse *threadSafeExporterWrapper) ExportSpans(ctx context.Context, spans []sdktrace.ReadOnlySpan) error {
	tse.mu.Lock()
	defer tse.mu.Unlock()
	return tse.exporter.ExportSpans(ctx, spans)
}

func (tse *threadSafeExporterWrapper) Shutdown(ctx context.Context) error {
	tse.mu.Lock()
	defer tse.mu.Unlock()
	return tse.exporter.Shutdown(ctx)
}
```

Following a couple of small fixes, this was merged and cherry-picked into v0.11 of BuildKit!

## Contribute to open source!

There is something very satisfying about making an improvement, however small, in a project used by thousands of people every day. While it may sometimes take some additional effort, it is vastly preferable to maintaining a fork. We continuously look for opportunities to contribute to open source at Raftt, and have contributed to [buildkit](https://github.com/moby/buildkit), [syncthing](https://github.com/syncthing/syncthing), [dlv](https://github.com/go-delve/delve), and other popular projects.
