Hi all! In this blog post, I’ll guide you through creating your own *Kube or Fake?* mini-game using ChatGPT. For those of you who joined late, *Kube or Fake?* is a Kubernetes/ChatGPT mini-game created by Raftt, where the player must distinguish between real Kubernetes terms and fake ChatGPT-generated ones (and it all happens live 💪). If you haven’t already tried it, [kick back and enjoy](https://kube-or-fake.raftt.io).

First, we will get familiar with the ChatGPT API and see how we can use it to generate text. We will then see how we can integrate it into a small funky app, wrap it nicely enough, and publish it for the whole world (or a couple of friends)!

# Step 1 - Using ChatGPT to Generate K8s Terms

The most important part of our mini-game is having ChatGPT generate Kubernetes terms (either real or fake). Since we want to use ChatGPT to power our app, let’s have it return the result in a structured syntax:

```json
{
  "term": <the generated term>,
  "isReal": <true if the term is real, else false>,
  "description": <description of the term>
}
```

## Attempt #1: Making ChatGPT flip a coin

Our initial approach was letting ChatGPT decide whether the generated Kubernetes term should be real or fake, using a prompt as follows:

```python
import openai

def generate():
    prompt = """You are a Kubernetes expert, and also a game master.
You are 50% likely to respond with a real Kubernetes term (either a resource kind or field name), and 50% likely to make up a fake term which resembles a real one, in order to confuse the player.
Your response syntax is:
{
  "term": your generated term,
  "isReal": true if the term is real, else false,
  "description": a short description of the term. If it's fake, don't mention it
}"""
    messages = [
        {
            "role": "system",
            "content": prompt
        }
    ]
    # Note: `openai.ChatCompletion` is the interface of the pre-1.0 `openai` package
    return openai.ChatCompletion.create(
        model="gpt-3.5-turbo-16k-0613",
        messages=messages
    )
```

Notice the API call `openai.ChatCompletion.create(...)` which requires choosing a specific GPT model to use, and the structure of messages we pass to it.

When getting the response back, we retrieve its content as follows:

```python
import json

response = generate()
content = response['choices'][0]['message']['content']
term = json.loads(content)  # Since we instructed ChatGPT to respond with a JSON string
```
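Since the model is only *asked* to reply with JSON, it can be worth checking the reply before trusting it. Here is a minimal sketch of such a check; `parse_term` and `REQUIRED_KEYS` are hypothetical names that are not part of the original game code:

```python
import json

# Expected fields and their types, following the response syntax in the prompt
REQUIRED_KEYS = {"term": str, "isReal": bool, "description": str}


def parse_term(content):
    """Parse the model's reply and check it has the expected shape."""
    try:
        data = json.loads(content)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    for key, expected_type in REQUIRED_KEYS.items():
        # A missing key yields None, which fails every isinstance check
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"missing or mistyped field: {key}")
    return data
```

Calling `parse_term(content)` instead of `json.loads(content)` turns a malformed reply into a single, predictable `ValueError`.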

Overall, this attempt worked pretty well, but the observed split between real and fake terms wasn’t 50/50. After running the same prompt a few times, it became pretty obvious that ChatGPT was biased towards real Kubernetes terms. We can overcome this by either:

- Fine-tuning the probabilities in the prompt (e.g. 30% real / 70% fake) such that the actual probability is closer to 50/50.

- Extracting the coin flip to the code, and writing a different prompt for each side of the coin.
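To put a number on the bias rather than eyeballing it, one option is to collect a batch of generated terms and compute the share flagged as real. This is only a sketch: `real_ratio` is a hypothetical helper, and the sample below is made-up data standing in for actual ChatGPT replies.

```python
from collections import Counter


def real_ratio(terms):
    """Return the fraction of generated terms flagged as real."""
    if not terms:
        raise ValueError("need at least one term")
    counts = Counter(bool(term["isReal"]) for term in terms)
    return counts[True] / len(terms)


# Made-up sample; in practice, collect these by calling generate() repeatedly
sample = [
    {"term": "Pod", "isReal": True},
    {"term": "Deployment", "isReal": True},
    {"term": "ClusterFlux", "isReal": False},
    {"term": "ConfigMap", "isReal": True},
]
print(real_ratio(sample))  # 0.75
```

A ratio that stays well above 0.5 over a larger batch would confirm the bias towards real terms.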

## Attempt #2: Flip the coin before invoking ChatGPT API

We’ll need two different prompts for this approach, depending on the coin flip result.

**Prompt #1**

```
You are a Kubernetes expert.
Generate a real Kubernetes term, which is either a resource kind or a field name.
Response syntax:
{
  "term": your generated term,
  "isReal": true,
  "description": a short description of the term
}
```

**Prompt #2**

```
You are a Kubernetes expert.
Generate a random, fake Kubernetes term, which resembles a resource kind or a field name.
An average software developer should not be able to immediately realize this is a fake term.
Make sure that this is in fact, not a real Kubernetes term.
Response syntax:
{
  "term": your generated term,
  "isReal": false,
  "description": a short description of the term, don't mention it is fake
}
```

We’ll make the necessary adjustments to our code, and add the coin flip logic:

```python
import openai
import random

# REAL_PROMPT and FAKE_PROMPT hold the two prompts shown above

def generate():
    prompts = [REAL_PROMPT, FAKE_PROMPT]
    random.shuffle(prompts)
    messages = [
        {
            "role": "system",
            "content": prompts[0]
        }
    ]
    return openai.ChatCompletion.create(
        model="gpt-3.5-turbo-16k-0613",
        messages=messages
    )
```

This approach worked **much** better, and the observed split was a lot closer to 50/50 😛
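The two prompts share their opening line and response syntax, so one way to define `REAL_PROMPT` and `FAKE_PROMPT` is to assemble them from a common template. The sketch below assumes that approach; `build_prompt` and `RESPONSE_SYNTAX` are hypothetical names, and defining the prompts as plain strings works just as well.

```python
# Shared response-syntax block; {{ and }} are literal braces for str.format
RESPONSE_SYNTAX = """Response syntax:
{{
  "term": your generated term,
  "isReal": {is_real},
  "description": {description_hint}
}}"""


def build_prompt(instructions, is_real, description_hint):
    """Assemble a system prompt from its instruction lines (hypothetical helper)."""
    syntax = RESPONSE_SYNTAX.format(
        is_real="true" if is_real else "false",
        description_hint=description_hint,
    )
    return "\n".join(["You are a Kubernetes expert.", *instructions, syntax])


REAL_PROMPT = build_prompt(
    ["Generate a real Kubernetes term, which is either a resource kind or a field name."],
    is_real=True,
    description_hint="a short description of the term",
)

FAKE_PROMPT = build_prompt(
    [
        "Generate a random, fake Kubernetes term, which resembles a resource kind or a field name.",
        "An average software developer should not be able to immediately realize this is a fake term.",
        "Make sure that this is in fact, not a real Kubernetes term.",
    ],
    is_real=False,
    description_hint="a short description of the term, don't mention it is fake",
)
```

Keeping the shared parts in one place means a change to the response syntax only has to be made once.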

## Attempt #3: Adding a 3rd side to the coin

The previous approach was pretty good output-wise, and met our requirements almost perfectly. Now, the only thing missing from this game is the “oh-my-god-chatgpt-is-so-random” funny part!

To spice it up just a bit, let’s add a *third* prompt and let ChatGPT run wild:

```
You are a Kubernetes expert.
Generate a random, obviously fake Kubernetes term, which resembles a resource kind or a field name.
An average software developer should be able to immediately realize this is a fake term.
Make sure that this is in fact, not a real Kubernetes term.
Extra points for coming up with a funny term.
Response syntax:
{
  "term": your generated term,
  "isReal": false,
  "description": a short description of the term, don't mention it is fake
}
```

We can either keep the prompts equally likely (~33% chance for each), or play with the probability values as we see fit. “Prompt engineering” often leads to add-on sentences like “Make sure that this is in fact, not a real Kubernetes term”, because the model makes many mistakes and this helps keep it on track.
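If we do want unequal odds, `random.choices` accepts per-item weights. The snippet below is a sketch under assumptions: the weights are arbitrary illustrative values, `pick_prompt` is a hypothetical helper, and the prompt constants are stand-ins for the full prompt texts.

```python
import random

# Stand-ins for the three prompt texts shown above
REAL_PROMPT = "real"
FAKE_PROMPT = "fake"
OBVIOUSLY_FAKE_PROMPT = "obviously fake"

# Illustrative weights only; tune them to taste
PROMPT_WEIGHTS = {
    REAL_PROMPT: 0.5,
    FAKE_PROMPT: 0.35,
    OBVIOUSLY_FAKE_PROMPT: 0.15,
}


def pick_prompt(weights=PROMPT_WEIGHTS, rng=random):
    """Pick one prompt at random, honoring the given weights."""
    prompts = list(weights)
    return rng.choices(prompts, weights=[weights[p] for p in prompts], k=1)[0]
```

Passing a seeded `random.Random` instance as `rng` makes the selection reproducible, which is handy when testing.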

### Deploying the term generator as an AWS Lambda

We’ll soon get to the code of the game itself, but first let’s publish our term-generating code so it is publicly available. For this purpose, let’s use AWS Lambda.

We want the Lambda handler to do the following:

- Flip a (3-sided 🙃) coin (using `random`)

- Depending on the flip result, either:

  - Generate a real Kubernetes term

  - Generate a not-so-obviously-fake Kubernetes term, to make the game a bit more difficult

  - Generate an obviously-fake Kubernetes term, to make the game a bit funnier

- Respond with the JSON generated by ChatGPT

I’ll be using Python as my Lambda runtime, but this is easily achievable with other runtimes as well.

The general structure of our Lambda code is as follows:

```python
import json
import openai
import os
import random

openai.api_key = os.getenv("OPENAI_API_KEY")
OPENAI_MODEL_NAME = os.getenv("OPENAI_MODEL_NAME")

# REAL_PROMPT, FAKE_PROMPT and OBVIOUSLY_FAKE_PROMPT hold the three prompts shown above

def generate(prompt):
    # Same call as in the earlier attempts, with the prompt and model passed in
    return openai.ChatCompletion.create(
        model=OPENAI_MODEL_NAME,
        messages=[{"role": "system", "content": prompt}]
    )

def lambda_handler(event, context):
    prompts = [REAL_PROMPT, FAKE_PROMPT, OBVIOUSLY_FAKE_PROMPT]
    random.shuffle(prompts)
    response = generate(prompts[0])
    body = json.loads(response['choices'][0]['message']['content'])
    return {
        'statusCode': 200,
        'headers': {
            "Content-Type": "application/json",
            "X-Requested-With": '*',
            "Access-Control-Allow-Headers": 'Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Requested-With',
            "Access-Control-Allow-Origin": '*',
            "Access-Control-Allow-Methods": 'GET, OPTIONS',
            "Access-Control-Allow-Credentials": True  # Required for cookies, authorization headers with HTTPS
        },
        # API Gateway's proxy integration expects the body to be a string
        'body': json.dumps(body)
    }
```
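The handler above fails if ChatGPT returns something that isn't valid JSON. One possible safeguard is to retry a few times before giving up. This is a sketch, not part of the original Lambda: `generate_term_with_retry` is a hypothetical helper that takes the generating function as a parameter, so it can be tried without calling the OpenAI API.

```python
import json


def generate_term_with_retry(generate_fn, prompt, attempts=3):
    """Call generate_fn(prompt) until the reply parses as a JSON object."""
    last_error = None
    for _ in range(attempts):
        response = generate_fn(prompt)
        content = response["choices"][0]["message"]["content"]
        try:
            body = json.loads(content)
        except json.JSONDecodeError as exc:
            last_error = exc
            continue  # malformed reply, ask again
        if isinstance(body, dict):
            return body
        last_error = ValueError("reply is not a JSON object")
    raise RuntimeError(f"no valid term after {attempts} attempts") from last_error
```

In the handler, `body = generate_term_with_retry(generate, prompts[0])` would replace the direct `json.loads(...)` call. Keep in mind that every retry is another API call, so the Lambda timeout may need to grow accordingly.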

We’ll cover two easy ways to deploy the Lambda code to AWS. Use whichever is more convenient for you.

## Method 1: AWS Console

One approach to creating the Lambda function is via AWS Console. Using this approach we don’t bind the ChatGPT logic to our game repo, which allows us to quickly make changes to the Lambda without having to commit, push, and re-deploy our website.

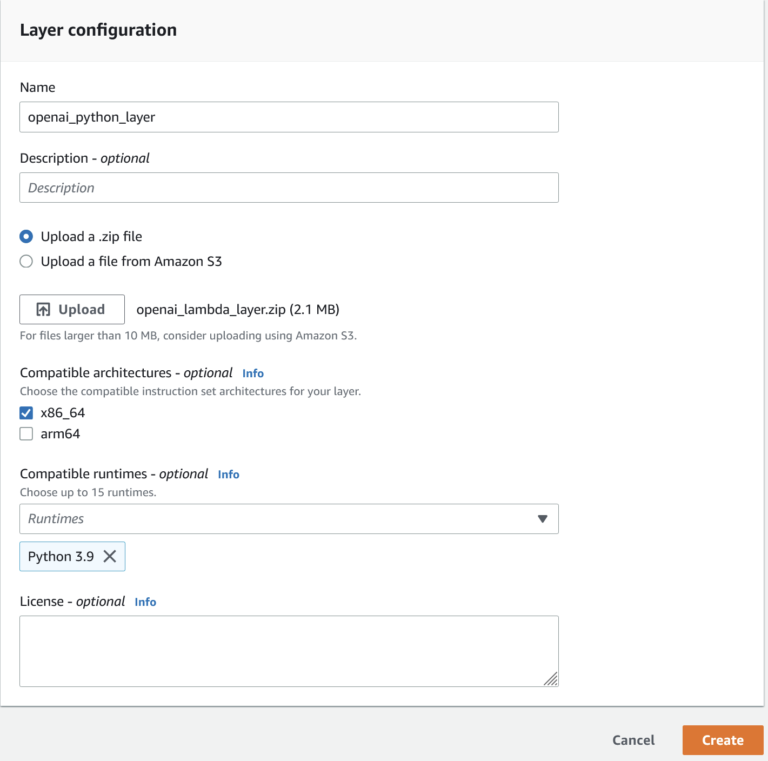

### Creating the OpenAI Lambda Layer

AWS Lambda Layers are a way to manage common code and libraries across multiple Lambda functions. A layer is made up of code and libraries that are organized into a package that can be reused across multiple functions.

But why do we need them? Well, unfortunately, AWS Lambda’s Python runtimes do not include the `openai` package by default. Hence, we must provide it as a layer:

1. Install the `openai` package locally, in a folder called `python`:

```bash
mkdir python
pip install openai -t python
```

2. After the installation is complete, zip the folder:

```bash
zip -r openai.zip python
```

3. Create the Lambda layer by going to the Lambda console → Layers → Create Layer. Provide a name for the layer, and select “Upload a .zip file”. Under “Compatible runtimes”, select Python 3.9. The final screen should look like this:

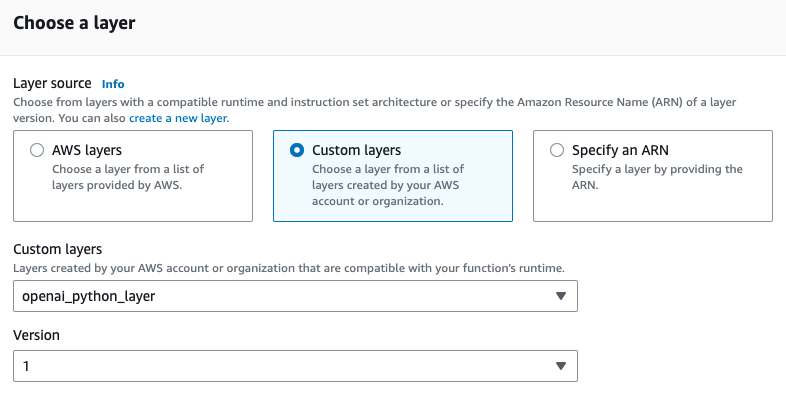

### Creating the Lambda Function

Now we’re ready to create the actual Lambda! Go to Functions → Create Function, and choose Python 3.9 as your runtime. The code editor should come up shortly.

To use our newly created layer, click Layers → Add a layer. Choose “Custom layers” as the source and pick the layer from the dropdown. When finished, click “Add”.

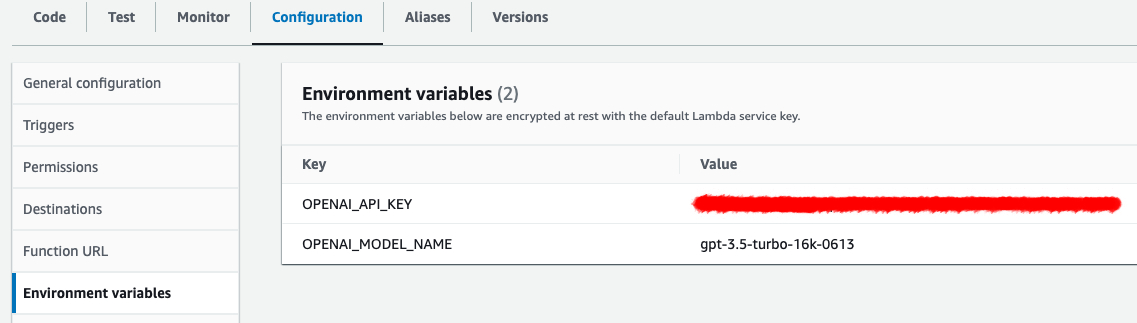

Next, we’ll define our environment variables. Go to Configuration → Environment variables and set the two env vars the code reads, `OPENAI_API_KEY` and `OPENAI_MODEL_NAME`.

You can use any model you’d like; I’ve chosen **gpt-3.5-turbo-16k-0613**.

Now we’re ready to code! Go to “Code”, open up the web IDE and paste the code snippet above. Click “Deploy” to publish the function. You can set up a function URL by going to Configuration → Function URL.

## Method 2: SAM

Another approach to AWS Lambdas is using [SAM](https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/install-sam-cli.html), which allows us to deploy Lambdas either locally or to our AWS account.

### Writing the Lambda handler

We’ll create a file `lambda/lambda.py` in our repository, and have the handler code written there.

### Creating a local Lambda layer

In order to create the `openai` layer locally, we should install it as follows:

```bash
pip install -r requirements.txt -t libs/python
```

### Creating a CloudFormation template

To deploy the Lambda, we first must set up a [CloudFormation](https://aws.amazon.com/cloudformation/) template in our project root directory, named `template.yml`:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Resources:
  OpenAILambdaLayer:
    Type: AWS::Serverless::LayerVersion
    Properties:
      LayerName: openai
      ContentUri: libs
  GenerateKubernetesTermFunction:
    Type: AWS::Serverless::Function
    Properties:
      Environment:
        Variables:
          OPENAI_API_KEY: << replace >>
          OPENAI_MODEL_NAME: << replace >>
      CodeUri: lambda/
      Handler: lambda.lambda_handler
      Runtime: python3.10
      Timeout: 10
      FunctionUrlConfig:
        AuthType: NONE
      Events:
        GenerateTerm:
          Type: Api
          Properties:
            Path: /generate
            Method: get
      Layers:
        - !Ref OpenAILambdaLayer
Outputs:
  GenerateKubernetesTermFunction:
    Value: !GetAtt GenerateKubernetesTermFunction.Arn
  GenerateKubernetesTermFunctionIAMRole:
    Value: !GetAtt GenerateKubernetesTermFunctionRole.Arn
  GenerateKubernetesTermFunctionURL:
    Value: !GetAtt GenerateKubernetesTermFunctionUrl.FunctionUrl
```

The template above defines the resources that will be deployed as part of the CloudFormation stack:

- Lambda function + layer

- IAM Role associated with the Lambda function

- API Gateway

From here we can deploy the Lambda function either locally, or to AWS.

### Local Lambda deployment

The Lambda can be run locally with `sam`:

```bash
sam local start-api
```

This command starts a server listening on `localhost:3000`. The command output should look like this:

```
Mounting GenerateKubernetesTermFunction at http://127.0.0.1:3000/generate [GET]
You can now browse to the above endpoints to invoke your functions. You do not need to restart/reload SAM CLI while working on your functions, changes will be reflected instantly/automatically. If you used sam build before running local commands, you will need to re-run sam build for the changes to be picked up. You only need to restart SAM CLI if you update your AWS SAM template
2023-07-20 11:58:51 WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
 * Running on http://127.0.0.1:3000
2023-07-20 11:58:51 Press CTRL+C to quit
```

When the Lambda is invoked via `localhost:3000/generate`, some more logs are shown:

```
Invoking lambda.lambda_handler (python3.10)
OpenAILambdaLayer is a local Layer in the template
Local image is up-to-date
Building image.....................
Using local image: samcli/lambda-python:3.10-x86_64-b22538ac72603f4028703c3d1.
Mounting kube-or-fake/lambda as /var/task:ro,delegated, inside runtime container
START RequestId: b1c733b3-8449-421b-ae6a-fe9ac2c86022 Version: $LATEST
END RequestId: b1c733b3-8449-421b-ae6a-fe9ac2c86022
REPORT RequestId: b1c733b3-8449-421b-ae6a-fe9ac2c86022
```

*Note:* You may be asked to provide your local machine credentials to allow `sam` to interact with your local Docker daemon.

Be aware that the Lambda docker image will be built upon each invocation, and that there is no need to re-run `sam local start-api` when making changes to the Lambda code (changes to `template.yml` *do* require a re-run though).
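For a quick smoke test of the local endpoint, a few lines of Python using only the standard library will do. This is a sketch that assumes the server from `sam local start-api` is running and that the endpoint returns the term as JSON; `fetch_term` is a hypothetical helper, and its `opener` parameter exists only so the function can be exercised without a live server.

```python
import json
import urllib.request

# Endpoint printed by `sam local start-api`
LOCAL_URL = "http://127.0.0.1:3000/generate"


def fetch_term(url=LOCAL_URL, opener=urllib.request.urlopen, timeout=30):
    """GET the endpoint and decode the JSON term it returns."""
    with opener(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage, with the local server running:
#   term = fetch_term()
#   print(term["term"], term["isReal"])
```

The generous timeout accounts for the image build that happens on each local invocation.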

### Deploying to AWS

We do this using `sam` as well:

```bash
sam deploy
```

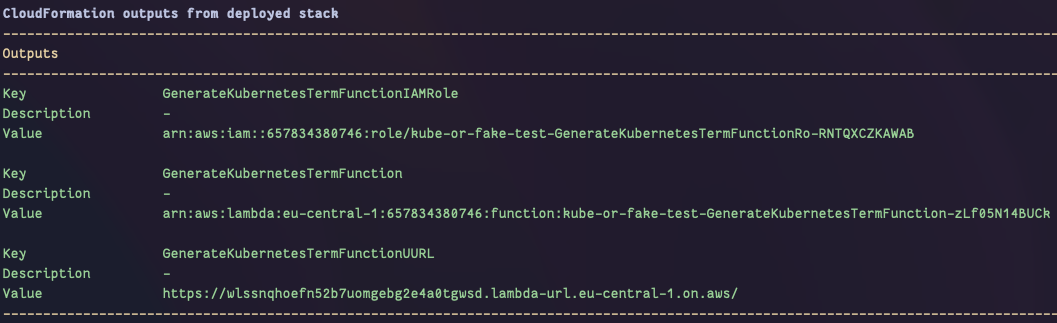

Follow the command's output to see where your new Lambda is created (`GenerateKubernetesTermFunctionURL`).

# Step 2 - Getting JSy With It

Our generator’s already up, so all we have to do is create the game’s UX.

There are many ways to implement this, but for the purpose of this guide I’ll be focusing on the raw JS script we’ll be using.

## Generate a Term by Invoking Lambda

We want the browser to make the call to our Lambda and generate a term. This can be done with `fetch`:

```js
const GENERATOR_URL = "{your generated function url}";

async function generateWord() {
  const response = await fetch(GENERATOR_URL);
  return await response.json();
}
```

## Implement “Guess” Logic

We want to show a result according to the user’s choice (“KUBE” or “FAKE”).

Here’s the relevant code, assuming we’ve created the buttons in the HTML already:

```js
// `word` is assumed to hold the term object returned by generateWord()
function checkGuess(guess) {
  const correctGuess = (guess === word.isReal);
  if (correctGuess) {
    // user is correct - show good message
  } else {
    // user is wrong - show bad message
  }

  if (word.isReal) {
    // show description
    document.getElementById("description").innerHTML = word.description;
  } else {
    document.getElementById("description").innerHTML = `${word.term} is an AI hallucination.`;
  }
}

// A single listener on the board handles clicks on both buttons
document.getElementById("game-board").addEventListener("click", (e) => {
  const targetClass = e.target.classList;
  if (targetClass.contains("kube-button")) {
    checkGuess(true);
  } else {
    checkGuess(false);
  }
});
```

And… that’s basically it! Of course you can add a lot more (score-keeping, animations, share buttons, etc.) but that’s completely up to you.

This may be the place to note that there are all kinds of fancy UI libraries for the web: React, Angular, Vue, … As you might have been able to tell from the website itself, we know none of these. 😄

# Step 3 - Deploy to GitHub Pages

We want the game to be publicly accessible, right? Let’s use GitHub Pages to make it work. In your repo, go to Settings → Pages. Select a branch to deploy from, choose your root folder as the source, and click “Save”. A workflow called “pages build and deployment” should start instantly.

This workflow will now be triggered by every push to your selected branch!

# Wrapping up

This was a blast to create, and (I hope) to play with. Don’t forget to share your results - we’d love to see forks of the game with changed UX or logic 🙂

Check out all the code in the repo - [https://github.com/rafttio/kube-or-fake](https://github.com/rafttio/kube-or-fake).
